How to see what’s coming with lidar

Without their suite of sensors, self-driving vehicles wouldn’t even be able to leave the car park. Here’s why lidar is so important.

You can’t get away from them, so you’d better start taking note of autonomous vehicles and the technologies that make them possible.

We haven’t quite reached the point where cars can fully drive themselves, but these vehicles are now developing at quite some pace. And as these autonomous systems find their way into more and more vehicles, they’ll have an even greater impact on our working lives.

Of course, many people in the motor industry will already be familiar with cameras, radar and ultrasonic sensors; after all, they feature in everything from parking aids to adaptive cruise control. However, fewer people will have encountered lidar. Let’s get to know this key bit of autonomous tech.

What is lidar?

Lidar (or light detection and ranging, to give it its full name) is also known as laser detection and ranging, laser scanning or laser radar. Like radar, it’s a sensing method that detects an object’s position and its distance from the sensor. An optical pulse is transmitted, and the reflected signal is measured as it returns. The time this pulse takes to return can vary from a few nanoseconds (ns) to several microseconds (μs).

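Most lidar systems use direct time-of-flight measurement. A discrete pulse is emitted, and the time difference between the emitted pulse and the return echo is then measured. Because the speed of light is a known constant, this delay can be converted into a distance: the pulse travels to the object and back, so the distance is half the speed of light multiplied by the round-trip time.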
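As a rough illustration of direct time-of-flight ranging, the Python sketch below converts a measured round-trip time into a distance using d = c × t / 2. It assumes the vacuum speed of light and ignores the slight slowing of light in air (about 0.03%); the function name `tof_to_distance_m` is our own, not part of any lidar API.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in a vacuum, metres per second


def tof_to_distance_m(round_trip_s: float) -> float:
    """Convert a round-trip time of flight (seconds) into a one-way distance (metres).

    The pulse travels to the object and back again, so the distance to the
    object is half of the total path covered during the measured time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# Example echo delays running from nanoseconds to microseconds.
for delay_s in (10e-9, 100e-9, 1e-6, 2e-6):
    print(f"{delay_s * 1e9:6.0f} ns -> {tof_to_distance_m(delay_s):6.1f} m")
```

On these figures, an echo arriving after 1μs corresponds to a target roughly 150m away, which is why timing electronics must resolve fractions of a nanosecond to measure distance to within centimetres.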

Time-of-flight measurement is a very reliable way of measuring distances without contact. Light is transmitted from a laser diode in set patterns, and data is extracted from the reflections received by a detector. Return pulse power, round-trip time, phase shift and pulse width are the properties of the returning signal that are most commonly analysed.
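Phase shift is one of the signal properties mentioned here. A minimal sketch, assuming an amplitude-modulated continuous-wave system rather than discrete pulses: the modulation on the returning light lags the outgoing signal by a phase proportional to distance. The 10MHz modulation frequency and the function names are hypothetical, chosen only for illustration.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second


def ambiguity_range_m(modulation_hz: float) -> float:
    """Longest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_hz)


def phase_to_distance_m(phase_shift_rad: float, modulation_hz: float) -> float:
    """Distance implied by the phase lag of an amplitude-modulated return.

    Over the round trip of 2*d the modulation envelope is delayed by
    phase = 2*pi*f*(2*d/c), which rearranges to d = c*phase / (4*pi*f).
    The phase is wrapped into [0, 2*pi), so the answer is only unique
    within the ambiguity range.
    """
    if modulation_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    wrapped = phase_shift_rad % (2.0 * math.pi)
    return SPEED_OF_LIGHT_M_S * wrapped / (4.0 * math.pi * modulation_hz)


f_mod = 10e6  # hypothetical 10 MHz modulation frequency
print(f"ambiguity range: {ambiguity_range_m(f_mod):.2f} m")
print(f"quarter-cycle lag: {phase_to_distance_m(math.pi / 2, f_mod):.2f} m")
```

The trade-off shows up in `ambiguity_range_m`: a higher modulation frequency turns a given phase error into a smaller distance error, but shortens the range before the phase wraps round and distances become ambiguous.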

Lidar can achieve very high resolution thanks to the short wavelength of the infrared light it uses, typically between 0.9 and 1.5μm (micrometres). This means that minimal processing is required to generate extremely high-resolution 3D images of nearby objects. Radar, by contrast, has a wavelength of around 4mm at 77GHz, making it difficult to resolve small features, especially at a distance.
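The wavelength comparison can be checked with λ = c / f. The sketch below also makes a rough Rayleigh-criterion estimate (θ ≈ 1.22 λ / D) of the finest angle each sensor could resolve through the same aperture. The 25mm aperture is an assumption for illustration only; real sensors are limited by many other factors besides diffraction.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second
APERTURE_M = 0.025  # hypothetical 25 mm aperture, the same for both sensors


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength of an electromagnetic wave from its frequency."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def rayleigh_limit_deg(wavelength: float, aperture: float = APERTURE_M) -> float:
    """Approximate diffraction-limited angular resolution of a circular aperture."""
    return math.degrees(1.22 * wavelength / aperture)


radar = wavelength_m(77e9)  # 77 GHz automotive radar
for name, lam in (("radar 77 GHz", radar), ("lidar 0.9 μm", 0.9e-6), ("lidar 1.5 μm", 1.5e-6)):
    print(f"{name}: wavelength {lam * 1e3:.4f} mm, "
          f"resolution ~{rayleigh_limit_deg(lam):.4f} deg")
```

With these assumptions the radar wavelength comes out at about 3.9mm and its diffraction limit at roughly 11°, while the lidar figures are several thousand times smaller, which is the gap behind lidar’s ability to pick out small features.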

Solid-state lidar and radar both have an excellent horizontal field of view (FOV). However, mechanical lidar systems mounted on top of the vehicle have the widest FOV of all because they rotate through 360°. One drawback of mechanical lidar systems, though, is that they tend to be quite bulky. They have become smaller over time, but there’s a general shift in the industry towards solid-state lidar devices, particularly as the prices of these sensors have dropped considerably in recent years. It’s likely that a solid-state lidar module will cost less than £200 by 2022.

Choices, choices…

Beyond solid-state and mechanical, there are three main types of lidar sensor: flash, electro-mechanical and optical phased array. A flash lidar works much like a digital camera using its flash: a single large-area pulse of invisible light illuminates the environment ahead.

An array of photodetectors then captures the reflected light. Each detector is able to determine the distance, location and reflection intensity. Because this method takes in the entire scene as a single image, the data comes in much faster.
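To illustrate how a flash lidar’s detector array yields a whole depth image from a single pulse, the sketch below applies the time-of-flight conversion to every pixel of a tiny grid in one pass. The 2 × 3 frame of echo times is invented, `None` stands in for a pixel with no usable return, and a real sensor would also report intensity for each pixel.

```python
from typing import List, Optional

SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second

Frame = List[List[Optional[float]]]  # rows of per-pixel values; None = no echo


def depth_image(round_trip_s: Frame) -> Frame:
    """Turn a grid of per-pixel round-trip times (s) into distances (m).

    Every detector in the array sees the same flash, so the whole scene
    is converted at once; pixels without a usable echo stay None.
    """
    return [
        [None if t is None else SPEED_OF_LIGHT_M_S * t / 2.0 for t in row]
        for row in round_trip_s
    ]


# Hypothetical 2 x 3 frame of echo times captured from a single flash.
frame: Frame = [
    [100e-9, 100e-9, None],
    [200e-9, 200e-9, 400e-9],
]
for row in depth_image(frame):
    print(["  -  " if d is None else f"{d:5.1f}" for d in row])
```

Because each pixel’s row and column already fix the direction it looks in, the depth image plus the detector layout is enough to locate every return.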

Also, because the entire image is captured in a single flash, this method is less vulnerable to vibrations that could distort the picture over time. One problem, however, is that reflectors on other vehicles can send too much of the light straight back rather than scattering it, which can overload the lidar sensor. A further disadvantage is the high laser power needed to illuminate the scene out to a useful distance.

Far more common than flash lidar is the narrow-pulsed time-of-flight method used in a micro-electro-mechanical system (MEMS). In this setup, mirrors steer sequential laser beams, and their angles can be changed by applying a voltage.

Steering the beam both horizontally and vertically requires several mirrors arranged in a cascade. The alignment process is not simple and can be affected by vibrations. Automotive temperature specifications start at around −40°C, which can be a difficult operating environment for a MEMS device. Development is ongoing, but it is likely that MEMS lidar will end up being the sensor of choice for many manufacturers.
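A MEMS scanner fires one beam at a time and moves its mirrors between shots. The sketch below generates a simple serpentine raster of beam angles and the mirror tilt each would need; it relies on the fact that tilting a mirror turns the reflected beam through twice the tilt angle. The field of view, grid size and function names are hypothetical, and real MEMS devices may use resonant, sinusoidal scan patterns rather than a stepped grid.

```python
from typing import Iterator, Tuple


def mirror_tilt_deg(beam_angle_deg: float) -> float:
    """Mechanical mirror tilt needed for a given beam deflection on one axis.

    Tilting a mirror by an angle turns the reflected beam by twice that
    angle, so (to a first approximation, per axis) the mirror only has to
    move half as far as the beam.
    """
    return beam_angle_deg / 2.0


def raster_scan(h_fov_deg: float, v_fov_deg: float,
                h_steps: int, v_steps: int) -> Iterator[Tuple[float, float]]:
    """Yield (azimuth, elevation) beam angles, in degrees, one shot at a time.

    Rows are swept alternately left-to-right and right-to-left (a
    serpentine raster) so the mirror never has to fly back across the
    whole field of view between rows.
    """
    if h_steps < 2 or v_steps < 2:
        raise ValueError("need at least two steps on each axis")
    for row in range(v_steps):
        elevation = -v_fov_deg / 2 + row * v_fov_deg / (v_steps - 1)
        cols = range(h_steps) if row % 2 == 0 else range(h_steps - 1, -1, -1)
        for col in cols:
            azimuth = -h_fov_deg / 2 + col * h_fov_deg / (h_steps - 1)
            yield azimuth, elevation


# Hypothetical 60 x 20 degree field of view sampled on a coarse 5 x 3 grid.
for az, el in raster_scan(60.0, 20.0, 5, 3):
    print(f"beam ({az:+6.1f}, {el:+5.1f}) deg, "
          f"mirror tilt ({mirror_tilt_deg(az):+5.1f}, {mirror_tilt_deg(el):+5.1f}) deg")
```

Each angle pair in the sequence depends on the mirrors being exactly where the controller expects, which is why misalignment and vibration matter so much for this design.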

Optical phased arrays are a more recent, fully solid-state alternative to MEMS devices. Electronic signals are used to adjust the angles of the sequential laser beams, rather than mechanically operated mirrors. This could theoretically eliminate some of the operating constraints experienced by MEMS sensors, but further development is required.
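The electronic steering in an optical phased array can be sketched with the textbook relation for a uniform linear array, sin θ = λ Δφ / (2π d), where Δφ is the phase step between neighbouring emitters and d is their spacing. The 1550nm wavelength, 2μm spacing and function names below are hypothetical, and the sketch ignores side lobes and per-emitter amplitude.

```python
import math


def steering_angle_deg(phase_step_rad: float, wavelength_m: float, pitch_m: float) -> float:
    """Beam angle from broadside for a uniform linear optical phased array.

    Emitters spaced pitch_m apart, each driven phase_step_rad ahead of its
    neighbour, tilt the combined wavefront so that
    sin(theta) = wavelength * phase_step / (2 * pi * pitch).
    """
    s = wavelength_m * phase_step_rad / (2.0 * math.pi * pitch_m)
    if abs(s) > 1.0:
        raise ValueError("no real steering angle for this phase step and pitch")
    return math.degrees(math.asin(s))


def phase_step_for_angle(angle_deg: float, wavelength_m: float, pitch_m: float) -> float:
    """Inverse of steering_angle_deg: the per-emitter phase step for a target angle."""
    return 2.0 * math.pi * pitch_m * math.sin(math.radians(angle_deg)) / wavelength_m


WAVELENGTH_M = 1.55e-6  # hypothetical 1550 nm source
PITCH_M = 2.0e-6        # hypothetical 2 μm spacing between emitters

for target in (-10.0, 0.0, 10.0):
    step = phase_step_for_angle(target, WAVELENGTH_M, PITCH_M)
    print(f"{target:+5.1f} deg needs a phase step of {step:+.3f} rad "
          f"-> {steering_angle_deg(step, WAVELENGTH_M, PITCH_M):+5.1f} deg")
```

Nothing moves here: changing the phase step electronically is what swings the beam, which is why phased arrays could sidestep the vibration and alignment issues of mirrors.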

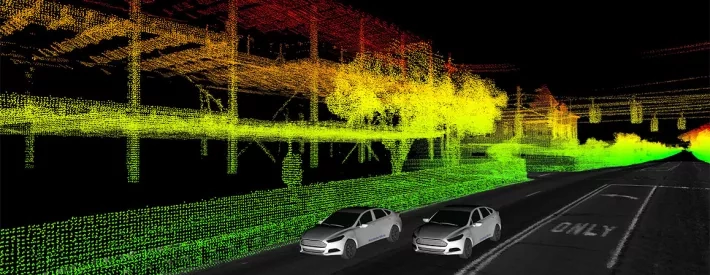

Once all the data has been captured by the lidar sensors, it’s processed to produce a point cloud, effectively a 3D map of the vehicle’s surroundings. This data must be processed very quickly so the car can decide on a path that’s safe to take. In some circumstances, even over a short period, many terabytes of data can be collected.
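Building a point cloud means turning each return (a range plus the beam’s two angles) into x, y, z coordinates. The sketch below assumes a sensor frame with x forward, y to the left and z up; that convention, the function names and the sample returns are illustrative choices, and different sensors and software stacks define their axes differently.

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]  # x, y, z in metres


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one lidar return (range plus beam angles) into x, y, z.

    Assumed sensor frame: x points forward, y to the left, z up; azimuth
    is measured from x towards y, elevation upwards from the horizontal.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def build_point_cloud(returns: Iterable[Tuple[float, float, float]]) -> List[Point]:
    """Map (range_m, azimuth_deg, elevation_deg) returns to a list of 3D points."""
    return [to_cartesian(r, az, el) for r, az, el in returns]


# Hypothetical returns: straight ahead, off to the left, and slightly below.
cloud = build_point_cloud([(10.0, 0.0, 0.0), (5.0, 90.0, 0.0), (20.0, 0.0, -2.0)])
for x, y, z in cloud:
    print(f"x={x:6.2f}  y={y:6.2f}  z={z:6.2f}")
```

A real pipeline repeats this for every return in every scan and must also correct for the vehicle’s own motion during the scan, which is part of why processing has to be so fast.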

That’s the next stage in autonomous driving: processing. As more sensors appear on vehicles, more data will be collected. That means more for the car to analyse so that it knows if it can continue on its journey or if it needs to take evasive action.
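A back-of-the-envelope estimate shows how quickly lidar data accumulates as sensors are added. Every figure in the sketch is hypothetical: two million points per second per sensor, 16 bytes per point (x, y, z and intensity stored as 32-bit floats) and five lidar units; real point rates and formats vary widely between sensors.

```python
def data_volume_bytes(points_per_second: float, bytes_per_point: int,
                      seconds: float, sensors: int = 1) -> float:
    """Raw point-cloud data produced by a number of identical lidar sensors."""
    return points_per_second * bytes_per_point * seconds * sensors


# All figures below are hypothetical, chosen only to show the arithmetic.
POINTS_PER_SECOND = 2_000_000  # per sensor
BYTES_PER_POINT = 16           # x, y, z and intensity as 32-bit floats
SENSORS = 5

per_hour = data_volume_bytes(POINTS_PER_SECOND, BYTES_PER_POINT, 3600, SENSORS)
print(f"{per_hour / 1e9:.1f} GB per hour, {8 * per_hour / 1e12:.1f} TB per 8-hour shift")
```

Under these assumptions the five sensors generate 576GB an hour before any camera or radar data is counted, and because the total scales linearly, every extra sensor adds its full share.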